I have worked on a variety of autonomous ground and aerial vehicles. Some of them worked better than others, but they've all had their own quirks and have been a blast to work with.

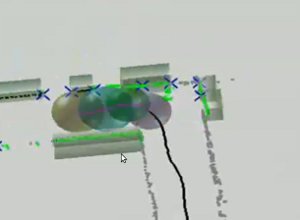

Robbie 9 was a racing robot built at the University of Koblenz-Landau to compete in the SICK Robot Day 2007. I built a simulator that replicated the robot's physical characteristics, and I co-developed its primary navigation algorithm.

The Rovers are a pair of autonomous ground vehicles that I designed, built and used for research as part of a research team at Iowa State University. The vehicles were designed from the ground up as research testbeds, so they were robust and flexible throughout, from their heavy-duty construction to their modular C++ onboard software.

The Rovers proved their versatility when they were put to use as part of Iowa State University's 2008 entry into the University Mars Rover Challenge, where they were teleoperated in the harsh environment of the Utah desert.

The Rovers' primary function was testing obstacle avoidance, indoor navigation and mapping algorithms. A range of new algorithms was developed on these testbeds, and multiple papers were published based on the results.

I've also worked on a handful of autonomous helicopter projects in various capacities. I was the team leader for an Iowa State University Department of Computer and Electrical Engineering project to develop an autonomous helicopter for the International Aerial Robotics Competition. I worked on everything from sensor integration to control algorithms while coordinating a team of twelve to eighteen graduating seniors.

Back in the Department of Aerospace Engineering, I assisted in the development of a micro-sized autonomous helicopter based on a T-REX platform and the MicroPilot autopilot. There I worked in a general support role, helping to build airframes, modify systems, communicate with vendors and support the flight test program.

Most recently, I've been working at the German Aerospace Center, where I develop reactive obstacle avoidance systems for the three helicopters of the ARTIS project.

For a detailed look at the projects I've worked on and the skills I've utilized, have a look at my resume.